SAP BW customers have loved its robustness and BEx query flexibility for decades, but the reality is that SAP BW on HANA installations, particularly after migration to cloud-based hyperscalers, have become expensive to run. The main reason is that all enterprise data is stored in-memory (hot storage) by default. On top of that, the data is neither easily consumable nor shareable outside the SAP Analytics Cloud/BusinessObjects visualization world.

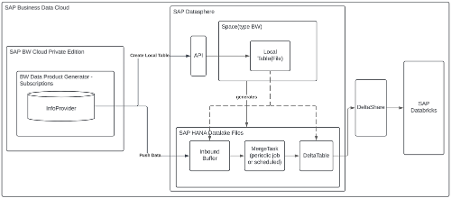

The next-generation SAP Business Data Cloud (BDC) platform now offers existing SAP BW customers a better conversion path to an open, flexible data architecture (over BW bridge) with the BW Data Product Generator (BW DPG for short), where the primary storage tiers are now SAP HANA Data Lake files (cold) and Local Tables (warm). If modelled right, there is no longer a need for (hot) data persistency. On top of that, the data is now easily consumable and shareable for any data scenario, because it is stored in Delta Table format (see picture below):

I will divide this blog post into two. In the first (this blog post), I will focus on the data aspect and how to trim an SAP BW on HANA system to a minimum to improve overall run costs. The second (to follow) will cover how to solve the front-end query part when moving to SAP BDC.

The majority of SAP BW on HANA EDWs have grown organically over a period of 10 to 20 years. Ahead of a conversion exercise, ONE consultants (ONE for short) provides its customers with its complementary “Oxygen tool” to break down the age of their data and evaluate the best strategy for trimming data from in-memory (hot) storage. Across its customer cases, ONE generally sees 3 buckets of BW queries:

Bucket 1: 40% of legacy BW queries are obsolete and can be decommissioned

Bucket 2: 20% of remaining legacy BW queries accounting for 80% of the data

Bucket 3: 80% of remaining legacy BW queries accounting for 20% of the data
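To make the bucket arithmetic concrete, here is a minimal sketch that applies the percentages above to a query count. The function name and the figure of 1,000 queries are purely illustrative assumptions, not numbers from a customer case.

```python
def split_query_buckets(total_queries: int) -> dict[str, int]:
    """Apply the 40% / 20% / 80% split described above to a query count."""
    obsolete = total_queries * 40 // 100   # Bucket 1: can be decommissioned
    remaining = total_queries - obsolete   # queries that survive the clean-up
    heavy = remaining * 20 // 100          # Bucket 2: roughly 80% of the data
    light = remaining - heavy              # Bucket 3: roughly 20% of the data
    return {"bucket_1": obsolete, "bucket_2": heavy, "bucket_3": light}


# Hypothetical landscape with 1,000 legacy BW queries
buckets = split_query_buckets(1000)
print(buckets)
```

In this hypothetical landscape, Bucket 2 is only 120 of the 1,000 queries (12% of the original estate), yet it sits on about 80% of the data, which is why it gets the attention.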

The focus will be on Bucket 2 above, and on companies evaluating how to move the related data off BW. Paths will differ depending on the complexity of the extractors/transformations in use, and on whether companies are already on an S/4 RISE or S/4 On-Prem implementation journey. Generally, 3 scenarios play out:

Scenario 1: S/4 journey is still 2+ years away from go-live

Scenario 2: S/4 RISE is live or has been initiated with go-live within 1-2 years

Scenario 3: S/4 On-Prem is live or has been initiated with go-live within 1-2 years

Scenario 1 is where it would be meaningful to consider the SAP BDC BW DPG option, where companies get an extension by SAP to 2030 for BW on HANA installations (to 2040 for BW/4 installations) when re-platformed to BW PCE. However, as part of moving to BW PCE, companies will want to work with a partner like ONE to ensure that their BW on HANA data footprint gets scaled back significantly, and that BW PCE is leveraged short-term primarily to process deltas, with a retention period of no more than 30-90 days for Bucket 2 above. History gets moved to HANA Data Lake, and the BW queries on top of the InfoProviders get rebuilt via virtual models (views and analytic models) within Datasphere.
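The retention idea in Scenario 1 can be sketched as a simple date cutoff: records inside the retention window stay in BW PCE, everything older is treated as history. This is only a conceptual illustration in plain Python; the function name, the `posting_date` field and the sample records are assumptions, and in practice the split would be handled by the BW and Datasphere tooling rather than by hand-written code.

```python
from datetime import date, timedelta


def split_by_retention(records, today, retention_days=90):
    """Split records into (retained in BW PCE, history for the data lake)."""
    cutoff = today - timedelta(days=retention_days)
    retained = [r for r in records if r["posting_date"] >= cutoff]
    history = [r for r in records if r["posting_date"] < cutoff]
    return retained, history


# Hypothetical delta records with a posting date
records = [
    {"doc": "A", "posting_date": date(2025, 6, 15)},
    {"doc": "B", "posting_date": date(2025, 4, 1)},
    {"doc": "C", "posting_date": date(2025, 3, 31)},
    {"doc": "D", "posting_date": date(2023, 1, 10)},
]

retained, history = split_by_retention(records, today=date(2025, 6, 30))
print([r["doc"] for r in retained], [r["doc"] for r in history])
```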

Scenario 2 is where BDC would offer SAP RISE customers SAP Data Products out-of-the-box (OOB). With most S/4 RISE customers going clean core, little customization should be required, but even where it is, SAP Data Products can be copied and used for those customized reporting needs.

Scenario 3 is where the situation hinges most on the S/4 on-prem upgrade approach. If an in-place S/4 upgrade is chosen where legacy customizations are retained, customers would be turning more towards Scenario 1, short-term at least. If an S/4 greenfield with conversion is chosen with a clean core focus, then ONE suggests that its customers leverage S/4HANA VDM models to generate Custom Data Products for BW rebuild scenarios.

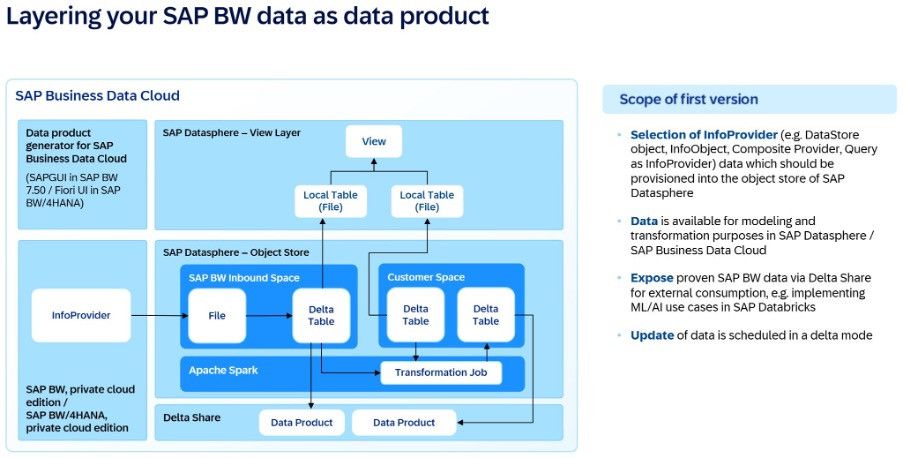

So, how do I work with my BW Data Products once I start transferring daily deltas into HANA Datastore Delta Tables? There are 3 ways to think about it, based on a company's use case:

Use case 1: Your reporting requirements are aggregated and non-complex, in which case you can use the Local Table (type file), in essence a remote table, to start modelling your use case with appropriate filters and column trimming.

Use case 2: Your reporting requirements are more detailed and complex, so it may be necessary to load your Delta Tables (files) into a Local Table (warm) in Datasphere. The cost is still far lower than in-memory.

Use case 3: You need your BW Data Products as part of a more complex transformation with other non-BW data. Here you can use transformation jobs processed directly by the Apache Spark engine for low-cost usage.
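The three use cases can be read as a small decision rule. The sketch below encodes that rule in Python as a thinking aid only; the enum, the function and its two boolean inputs are hypothetical simplifications and not part of any SAP API.

```python
from enum import Enum


class Option(Enum):
    LOCAL_TABLE_FILE = "Local Table (file), modelled with filters and column trimming"
    LOCAL_TABLE_WARM = "Delta Tables loaded into a Local Table (warm) in Datasphere"
    SPARK_TRANSFORMATION = "Transformation jobs on the Apache Spark engine"


def choose_option(detailed_or_complex: bool, combines_non_bw_data: bool) -> Option:
    """Map a reporting requirement to one of the three use cases above."""
    if combines_non_bw_data:
        # Use case 3: complex transformation together with non-BW data
        return Option.SPARK_TRANSFORMATION
    if detailed_or_complex:
        # Use case 2: detailed, complex reporting
        return Option.LOCAL_TABLE_WARM
    # Use case 1: aggregated, non-complex reporting
    return Option.LOCAL_TABLE_FILE


print(choose_option(detailed_or_complex=False, combines_non_bw_data=False).name)
```

Real requirements rarely reduce to two flags, so treat this as a starting point for the conversation rather than a rule to automate.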

Above use cases are from an architecture perspective depicted below:

ONE's experience, having worked with 10+ Datasphere customers since 2021, is that batch processing and persisting view outputs are overused (and costly to companies, as Datasphere CPU and memory then need to scale fairly fast), when the root cause is often virtual modelling that does not follow best practices: filters that do not hit the tables, columns that are not trimmed for the specific use case, and no modelling by exception (i.e. only bring in what you need to drive and join back). ONE has modelled in HANA SQL since 2011, and those best practices have not changed with SAP BDC and Datasphere, so it is important that companies have a partner that can QA and sense-check when batch processing and view persistence are really required.
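As a rough illustration of those modelling practices, the sketch below builds a view that filters at the source table, keeps only the columns the use case needs, and joins back a single attribute. It uses Python's built-in SQLite as a stand-in, not HANA SQL or Datasphere, and all table, column and view names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sales (doc_id INTEGER, fiscal_year INTEGER, customer_id INTEGER,
                    amount REAL, long_text TEXT);
CREATE TABLE customers (customer_id INTEGER, name TEXT, region TEXT);

INSERT INTO sales VALUES (1, 2023, 10, 100.0, 'x'),
                         (2, 2024, 10, 250.0, 'y'),
                         (3, 2024, 20,  75.0, 'z');
INSERT INTO customers VALUES (10, 'Acme', 'EMEA'), (20, 'Globex', 'APAC');

-- Filter hits the fact table directly, only two columns are carried forward,
-- and the customer table is joined back only for the one attribute needed.
CREATE VIEW v_sales_2024 AS
SELECT s.customer_id, c.region, SUM(s.amount) AS amount
FROM (SELECT customer_id, amount FROM sales WHERE fiscal_year = 2024) AS s
JOIN customers AS c ON c.customer_id = s.customer_id
GROUP BY s.customer_id, c.region;
""")

rows = conn.execute(
    "SELECT customer_id, region, amount FROM v_sales_2024 ORDER BY customer_id"
).fetchall()
print(rows)
```

The same shape (filter first, trim columns, join back late) is what keeps a virtual model cheap enough that persistence is not needed in the first place.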

Look out for my second blog post, to be released shortly, on rebuilding the front-end part of your BW legacy.